Abstract

This report analyzes the organ of the ear from a biomolecular, cellular and tissue engineering perspective. To understand the general function of the ear from this perspective, the focus is first placed on mammalian ears, particularly human ears, with the objective of illustrating basic cellular functions common to most mammals. The focus then shifts to the inner ear of the bat and to how this animal uses echolocation for spatial analysis in its dark environment. Finally, the discussion turns to the obstacles humans may encounter with echolocation and to how some humans use echolocation principles to map out the world around them.

Introduction

To properly absorb and perceive sound, the mammalian ear relies on various specialized tissues. These components evolved over long periods of time, ultimately maximizing the organ’s efficiency. Moving deeper into the inner ear, the cells, tissues and design components of the mammalian ear increase not only in complexity but also in sensitivity. However, not all mammals have followed the same evolutionary pathways. Owing to their varied environments, certain species have developed auditory systems so complex that they can accomplish supernatural-esque feats. For instance, bats, among other animals, can visualize their surroundings through sound with the help of echolocation. Understanding how such systems work can not only lead to great breakthroughs in human technology but may also change the way humans interact with and make use of their own auditory senses.

Cellular, Tissue and Design Components of Mammalian Ears

The Outer Ear

In terms of cellular and tissue complexity, the outer ear is the simplest part of the ear, which is fitting as it is the part most susceptible to damage and stress. The primary role of the outer ear is to capture incoming sound and channel it towards the inner parts of the ear, where it can begin to be perceived. Under the skin of the outer ear lies the auricular cartilage, which gives the ear its shape. This cartilage, a type of elastic cartilage, maintains the shape of the ear while keeping it flexible. It is also notable in that it contains neither blood vessels nor nerve cells (“Auricular Cartilage” | Healthline Editorial Team, 2015). The degrees of inclination and angles of attachment of the auricular cartilage, and hence the shape of the outer ear, play a significant role in the ear’s ability to localize the direction of sound (Abdalla, 2017). For sound to propagate directly into the ear canal, its source would have to be aligned with the axis of the canal, so sound almost never enters the ear canal directly. Instead, depending on the location of the source, it is reflected off one of the many folds formed by the auricular cartilage into the ear canal and on towards the middle ear. These folds maximize the range of angles from which sound can enter the ear, and, based on time delay, angle of incidence and several other factors, humans can determine where a sound is coming from.

The Middle Ear

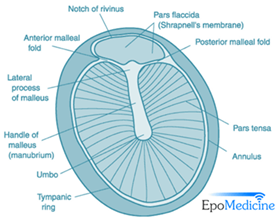

As sound propagates down the ear canal, it reaches the tympanic membrane, or eardrum, which forms the boundary between the outer and middle ear. The tympanic membrane is a semitransparent, slightly concave oval membrane with a diameter of 8 to 10 mm and a thickness of around 0.1 mm (“Applied Anatomy of Tympanic Membrane” | Epomedicine, 2013). Its thickened margin is attached to a groove in the tympanic annulus, an incomplete ring of bone that almost encircles the membrane and holds it in place. The small upper portion, the pars flaccida, is slack, whereas the larger portion, the pars tensa, is tightly stretched, allowing for sensitive vibration. The umbo, at the center of the tympanic membrane, is the point of attachment of the malleus, allowing the vibrations captured by the membrane to be transmitted mechanically to the auditory ossicles (Hawkins, 2020). Unlike the auricular cartilage, the membrane is supplied with blood vessels as well as sensory nerve fibers, making it sensitive to pain and overstimulation.

Electron microscopy has revealed three distinct layers in the tympanic membrane: an outer epidermal layer, a middle layer called the lamina propria, and an inner mucous layer. In the pars flaccida, the lamina propria is composed of loose connective tissue with a large amount of elastic and collagen fibers. By contrast, in the much thinner pars tensa, the lamina propria consists of outer radial and inner circular fibers. This difference in the middle layer accounts for most of the difference in texture between the two regions and in the way each interacts with sound (Lim, 1970).

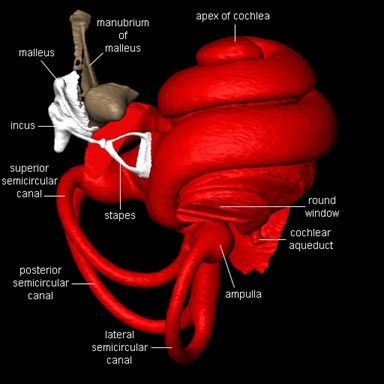

Following the tympanic membrane in the middle ear are the auditory ossicles, the smallest bones in the body: the malleus, the incus and, smallest of all, the stapes. Suspended by ligaments, these bones amplify the force of the tympanic membrane’s vibrations. This apparatus overcomes the challenge of transmitting airborne vibrations into the fluid-filled inner ear, whose acoustic impedance is much higher than that of air; without these bones, only around 0.1% of sound energy would reach the inner ear (Vetter, 2008). Fig. 3 is a simplified diagram from a study analyzing the mechanics of the auditory ossicles. The black dots represent pivots fixed to the walls of the tympanic cavity by ligaments, and the open circles represent free pivots that attach the bones to each other but not to the tympanic cavity. Starting with the force applied to the malleus at the umbo, the force is amplified by lever gains given by the distance ratios a/b and c/d. According to this study, the total “mechanical advantage”, or force gain, of these levers is approximately 13 (Guelke and Keen, 1952).
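The decibel arithmetic below is a small illustration added for this report, not part of Guelke and Keen’s analysis. It converts the two figures quoted above into decibels, assuming that the 0.1% figure is an energy (power) ratio and treating the force gain of 13 as an amplitude ratio. The helper `compound_lever_gain` simply multiplies lever ratios such as a/b and c/d, on the assumption that the two levers act in series; the ratios in its example are hypothetical, since the individual values of a, b, c and d are not given here.

```python
import math


def energy_ratio_to_db(ratio: float) -> float:
    """Express a power or energy ratio in decibels (10 * log10)."""
    return 10.0 * math.log10(ratio)


def amplitude_ratio_to_db(ratio: float) -> float:
    """Express a force or pressure (amplitude) ratio in decibels (20 * log10)."""
    return 20.0 * math.log10(ratio)


def compound_lever_gain(*arm_ratios: float) -> float:
    """Force gain of levers acting in series: the product of their arm ratios."""
    gain = 1.0
    for ratio in arm_ratios:
        gain *= ratio
    return gain


# About 0.1% of sound energy reaching the inner ear without the ossicles (Vetter, 2008)
print(f"energy transfer without ossicles: {energy_ratio_to_db(0.001):.1f} dB")
# Force gain of about 13 reported by Guelke and Keen (1952)
print(f"ossicular force gain: {amplitude_ratio_to_db(13):.1f} dB")
# Hypothetical arm ratios, NOT measured values: shows only how two lever gains combine
print(f"two levers with ratios 2.0 and 1.5: x{compound_lever_gain(2.0, 1.5):.1f}")
```

Under these assumptions, losing 99.9% of the energy corresponds to a 30 dB loss, while a force gain of 13 corresponds to roughly 22 dB.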

The Inner Ear and Cochlea Architecture

The inner ear fulfills two main functions: translating physical vibrations into electrical impulses that the brain can analyze as sound, and maintaining equilibrium. The cochlea, whose name derives from the Latin word for snail, has a similar structure in all mammals. It consists of a coiled, snail-shaped labyrinth embedded in the temporal bone of the skull (Elliott and Shera, 2012). When uncoiled, the cochlea is around 30 mm long; it is widest, at around 2 mm, at its base, where it is in contact with the stapes, and it tapers towards its apex. It is filled with fluid and divided into three main fluid chambers, as shown in Fig. 4, separated by sensitive membranes, most notably the basilar membrane (BM) (Elliott and Shera, 2012). The scala vestibuli, at the top, is separated from the scala media by a thin, flexible partition called Reissner’s membrane. The scala media is in turn separated from the scala tympani, at the bottom, by a rigid partition that includes a more flexible section, the basilar membrane. Motion in the cochlea is driven by the middle ear, as discussed in greater detail later, and the pressure difference between the upper and lower fluid chambers drives the BM into motion (Elliott and Shera, 2012).

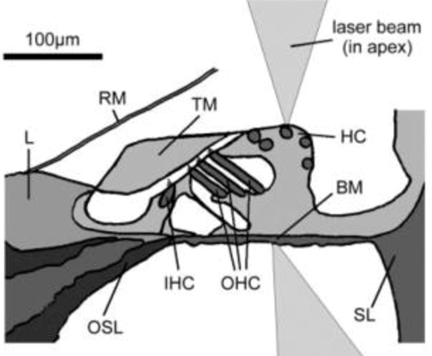

The organ of Corti, a long strip of sensory cells, supporting cells and nerve endings within the scala media, sits directly on the basilar membrane. The basilar membrane is spanned by more than 20 000 fibers that become longer and less stiff from the base towards the apex. When the inner ear receives pressure waves transmitted from the middle ear, each part of the basilar membrane vibrates most strongly at a different frequency, a property known as frequency tuning. The shorter fibers at the base of the cochlea resonate with high-frequency sounds, while the longer fibers at the apex vibrate at lower frequencies. The transmission of sound to the brain thus begins with the vibration of the basilar membrane, which stimulates the hair cells of the organ of Corti responsible for mechanotransduction (MET). The organ of Corti contains two types of hair cells, as shown in Fig. 5: inner hair cells (IHCs) and outer hair cells (OHCs), both precisely adapted to transducing physical vibrations into electrical signals (Puel, 1995). Each 10 μm-long section of the organ of Corti contains a single inner hair cell, which converts the motion of its stereocilia into a chemical signal that excites adjacent nerve fibers via a ribbon synapse at the cochlear output, generating neural impulses that then pass up the auditory pathway into the brain. Such a section also contains three rows of outer hair cells, which play a more active role in the dynamics of the cochlea (Elliott and Shera, 2012). The outer hair cells contain prestin, a piezoelectric motor protein that generates force to amplify the mechanical stimulus (Fettiplace, 2017).
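The place-to-frequency mapping described above is often summarized by the Greenwood function, an empirical fit that is not used in the sources cited here and is introduced only as an illustration. The sketch below assumes the constants commonly quoted for the human cochlea (A = 165.4, a = 2.1, k = 0.88), with position x expressed as a fraction of basilar membrane length measured from the apex; other species would need different constants.

```python
def greenwood_frequency(x: float, A: float = 165.4, a: float = 2.1, k: float = 0.88) -> float:
    """Characteristic frequency (Hz) at relative position x along the basilar membrane.

    x = 0 is the apex and x = 1 is the base. The default constants are the
    commonly quoted human values; they are an assumption of this sketch.
    """
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 (apex) and 1 (base)")
    return A * (10 ** (a * x) - k)


# Frequency map at five evenly spaced positions from apex to base
for x in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"x = {x:.2f}: {greenwood_frequency(x):8.0f} Hz")
```

With these constants the map runs from roughly 20 Hz at the apex to roughly 20 kHz at the base, in line with the human audible range quoted later in this report.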

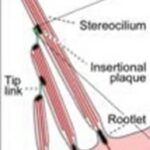

The individual stereocilia of a hair cell are arranged in a bundle, as shown in Fig. 5 (Elliott and Shera, 2012), and are firmly embedded in the tectorial membrane. The stereocilia of each bundle are graded in height and arranged in a bilaterally symmetric fashion. Displacement of the hair bundle parallel to the plane of symmetry, towards the tallest stereocilia, depolarizes the hair cell: the fine tip links that connect neighboring stereocilia are put under tension and open transduction channels that let positively charged ions from the surrounding fluid into the stereocilia. Movement along the same axis towards the shortest stereocilia causes hyperpolarization. In contrast, displacements perpendicular to the plane of symmetry do not alter the hair cell’s membrane potential. At the threshold of hearing, hair bundle movements are approximately 0.3 nm, about the diameter of a gold atom (Elliott and Shera, 2012).
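The directional sensitivity described above can be illustrated with a two-state Boltzmann model of transduction-channel gating, a common textbook simplification that is not taken from Elliott and Shera. In this sketch only the component of bundle displacement along the axis of symmetry changes tip-link tension. The midpoint `x0_nm` and slope `slope_nm` are hypothetical values, chosen only so that a small fraction of channels is open at rest.

```python
import math


def open_probability(displacement_nm: float, angle_deg: float = 0.0,
                     x0_nm: float = 20.0, slope_nm: float = 9.0) -> float:
    """Two-state Boltzmann sketch of transduction-channel open probability.

    angle_deg = 0 points towards the tallest stereocilia; 90 is perpendicular
    to the plane of symmetry. x0_nm and slope_nm are illustrative, not measured.
    """
    # Project the displacement onto the axis of symmetry of the bundle
    effective_nm = displacement_nm * math.cos(math.radians(angle_deg))
    return 1.0 / (1.0 + math.exp(-(effective_nm - x0_nm) / slope_nm))


rest = open_probability(0.0)
print(f"at rest:                  {rest:.3f}")
print(f"20 nm towards tallest:    {open_probability(20.0):.3f}")        # more channels open
print(f"20 nm towards shortest:   {open_probability(-20.0):.3f}")       # fewer channels open
print(f"20 nm perpendicular:      {open_probability(20.0, 90.0):.3f}")  # unchanged from rest
```

Positive displacements raise the open probability (depolarization), negative ones lower it (hyperpolarization), and a purely perpendicular displacement leaves it at its resting value.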

The ionic compositions of the fluids above and below the organ of Corti are quite different. The extracellular fluid in the scala tympani and scala vestibuli, the perilymph, is virtually identical to plasma or cerebrospinal fluid: it is low in potassium ions (K+) (4 mmol/L on average in mammals) but contains higher concentrations of Ca2+ (1.3 mmol/L), which supports synaptic transmission, and Na+ (148 mmol/L), which supports action potential generation (McPherson, 2018). The endolymph, the fluid in the scala media, has a high concentration of K+ (157 mmol/L) but low concentrations of Na+ (1 mmol/L) and Ca2+ (0.02 mmol/L). There is also a large voltage difference between the two fluids, with the endolymph being 80 to 100 mV positive relative to the fluid in the scala tympani (on average in mammals). This voltage, known as the endocochlear potential, greatly assists the depolarization of the hair cells (McPherson, 2018).

The hair cell has a resting potential of -45 to -60 mV relative to the perilymph and about -125 mV relative to the endolymph. At rest, only a small fraction of the transduction channels is open (Elliott and Shera, 2012). When the hair bundle is displaced towards the tallest stereocilium, more transduction channels open, causing depolarization as K+ from the endolymph enters the cell. Depolarization in turn opens voltage-gated calcium channels in the hair cell membrane, resulting in a Ca2+ influx. Because the cell membrane has capacitance, the current carried by this ionic flow changes the voltage of the hair cell (Elliott and Shera, 2012). In the inner hair cells, this voltage change causes the cell to release a chemical neurotransmitter. When this neurotransmitter binds to receptors on nearby nerve fibers, it produces voltage changes in the fibers that, once above a certain threshold, trigger the nerve impulses that carry signals to the brain (Elliott and Shera, 2012). Hair cells can convert the displacement of the stereociliary bundle into an electrical potential in as little as 10 microseconds. The effect of the corresponding motion-induced voltage in the outer hair cells is still being investigated in detail, but it clearly leads to expansions and contractions of the cell that amplify the motion of the organ of Corti at low sound levels (Elliott and Shera, 2012).
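The two resting-potential figures above are linked by simple subtraction: the electrical potential difference driving K+ through the transduction channels is the endocochlear potential minus the hair cell’s own resting potential. The sketch below checks this with values quoted in this report (+80 mV and -45 mV) and also illustrates the capacitive charging step with the relation ΔV = IΔt/C. The current, duration and capacitance in the second example are hypothetical, and the calculation ignores membrane leak currents, so it only approximates short current pulses.

```python
def transduction_driving_force_mv(endocochlear_mv: float, resting_mv: float) -> float:
    """Potential difference between the endolymph and the hair cell interior (mV)."""
    return endocochlear_mv - resting_mv


def capacitive_voltage_change_mv(current_na: float, duration_ms: float,
                                 capacitance_pf: float) -> float:
    """Voltage change of an ideal capacitor charged by a constant current: dV = I * dt / C.

    Membrane leak currents are ignored, so this only approximates short pulses.
    """
    charge_coulombs = (current_na * 1e-9) * (duration_ms * 1e-3)
    return charge_coulombs / (capacitance_pf * 1e-12) * 1e3


# Values quoted in the text: endolymph at +80 mV, hair cell at -45 mV (both re perilymph)
print(f"driving force: {transduction_driving_force_mv(80.0, -45.0):.0f} mV")
# Hypothetical values: 1 nA flowing for 0.1 ms into a 20 pF membrane
print(f"voltage change: {capacitive_voltage_change_mv(1.0, 0.1, 20.0):.1f} mV")
```

The 125 mV result is the same figure as the "about -125 mV relative to the endolymph" quoted above, seen from the other side of the membrane.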

Echolocation: “Seeing” with Sound

Echolocation in Nature

Whatever their environment, all animals must accomplish certain tasks, including navigating, identifying objects and finding food. However, not all animals live in the same habitats, so the tools they use to accomplish these tasks inevitably vary. Unsurprisingly, humans rely on their eyes to navigate, identify objects and find food. Other environments, however, lack light for extended periods of the day. How are animals to cope when their surroundings are not continually lit? The answer lies in the alternative methods that these species gradually adopted to map out the world around them. Consequently, certain animals have shifted their dependence from seeing with their eyes to “seeing” with their ears.

Only a small range of animals can map out the world around them through a process known as echolocation. Bats, dolphins, shrews, toothed whales and certain birds all use this technique to “see” in the dark (D’Augustino, 2018). Comparing the habitats of these animals with that of humans makes it evident why they cannot rely solely on their eyes to accomplish tasks vital to their survival. For instance, dolphins and whales navigate through waters that can be muddy and very dark at great depths (Price, 2015). In the case of bats, echolocation allows them to fly in the darkness of the night and through the dark caves in which they live, as well as to catch nocturnal insects (Price, 2015). Evidently, dark environments and nocturnal lifestyles prevent certain species from navigating primarily by sight.

In principle, echolocation is quite simple. The process can be divided into three main steps: emission, reflection and reception. First, the animal produces sound pulses and emits them into the environment at varying rates; different animals emit sounds at different frequencies, many of which cannot be heard by the human auditory system (Kim, 2015; Editors of Encyclopedia Britannica, “Echolocation”). Birds emit echolocation pulses at the lower end of the echolocation frequency range, around 1000 Hz, while whales and bats can emit pulses at the higher end of this range, up to more than 200 000 Hz (Editors of Encyclopedia Britannica, “Echolocation”). For comparison, the human audible frequency range spans roughly 20 Hz to 20 kHz (Purves et al., 2001). Second, these pulses reflect off obstacles in the environment and travel back towards the animal that emitted them (Kim, 2015). Third, the echoes return to the animal’s ears, where they are analyzed (Kim, 2015). Because the reflected waves arrive after a delay, they are perceived as new sounds rather than as prolongations of the original ones, which is what makes them echoes (“Reflection, Refraction, and Diffraction” | the Physics Classroom, n.d.). The time between emission and return of the echoes, the intensity of the echoes and their spectral content are then analyzed to identify and localize targets (Kim, 2015).
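The delay-based part of this analysis rests on a simple relation: the pulse travels to the target and back, so the target distance is half the round-trip path, d = c·t/2. The sketch below assumes a speed of sound of 343 m/s in air (at about 20 °C) and about 1500 m/s in sea water; both are approximations, and real animals combine the delay with the intensity and spectral cues described above. It also computes the wavelength λ = c/f at the ends of the frequency range mentioned, showing how much shorter the wavelengths of high-frequency calls are.

```python
SPEED_OF_SOUND_AIR_M_S = 343.0     # approximate, dry air at about 20 °C
SPEED_OF_SOUND_WATER_M_S = 1500.0  # approximate, sea water


def target_range_m(echo_delay_s: float, speed_m_s: float = SPEED_OF_SOUND_AIR_M_S) -> float:
    """Distance to a reflector from the round-trip echo delay (halved: out and back)."""
    return speed_m_s * echo_delay_s / 2.0


def wavelength_m(frequency_hz: float, speed_m_s: float = SPEED_OF_SOUND_AIR_M_S) -> float:
    """Wavelength of a sound of the given frequency: lambda = c / f."""
    return speed_m_s / frequency_hz


# An echo returning 10 ms after emission, in air and in water
print(f"air:   {target_range_m(0.01):.2f} m")
print(f"water: {target_range_m(0.01, SPEED_OF_SOUND_WATER_M_S):.2f} m")
# Wavelengths in air at the low (1 kHz) and high (200 kHz) ends of the echolocation range
print(f"1 kHz:   {wavelength_m(1_000) * 100:.1f} cm")
print(f"200 kHz: {wavelength_m(200_000) * 1000:.2f} mm")
```

Under these assumptions, a 10 ms delay corresponds to a target about 1.7 m away in air, or about 7.5 m away in water.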

Echolocation-inspired Technologies

Understanding the echolocation process in animals such as bats and dolphins has paved the way for echolocation-inspired technologies and will continue to do so for the foreseeable future. Sonar, radar and Lidar all rest on the same principle as echolocation.

The technology closest to biological echolocation is sonar, short for sound navigation and ranging. Sonar devices emit pulses of sound that spread out in cones and eventually encounter objects along their path. Just as in echolocation, these sound waves reflect off such objects and travel back towards the emitting device, which measures how long the waves take to return as well as the strength of the returning pulse. Stronger returns indicate harder objects (“How Sonars Work: Key Aspects to Know” | Deeper Smart Sonar, n.d.). Sonar systems can be active or passive, and the description above applies to active sonar. Passive sonar systems, on the other hand, do not emit their own waves but detect incoming waves emitted by other sources (“What is sonar?” | National Ocean Service, n.d.). By combining several passive sonar devices, the position, and hence the range, of a source can be determined through triangulation (“What is sonar?” | National Ocean Service, n.d.). Sonar is particularly useful underwater, where it is used to create nautical charts, map the seafloor and locate underwater objects that could be dangerous if hit during navigation (“What is sonar?” | National Ocean Service, n.d.).
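The triangulation mentioned above can be sketched for the simplest case: two passive sensors at known positions each measure only the bearing of the incoming sound, and the source lies where the two bearing lines cross. This is an illustrative, flat two-dimensional sketch under those assumptions. The function name and the angle convention (counter-clockwise from the x axis, rather than the compass bearings used at sea) are choices made here, and practical systems may also use other methods, such as differences in arrival time between sensors.

```python
import math


def triangulate(p1, bearing1_deg, p2, bearing2_deg):
    """Locate a sound source from two bearing-only measurements.

    p1, p2: (x, y) positions of the two passive sensors, in metres.
    Bearings are in degrees, counter-clockwise from the +x axis.
    Returns the (x, y) point where the two bearing lines intersect.
    """
    (x1, y1), (x2, y2) = p1, p2
    d1 = (math.cos(math.radians(bearing1_deg)), math.sin(math.radians(bearing1_deg)))
    d2 = (math.cos(math.radians(bearing2_deg)), math.sin(math.radians(bearing2_deg)))
    # Solve p1 + t*d1 = p2 + s*d2 for t using 2-D cross products.
    denom = d1[0] * d2[1] - d1[1] * d2[0]
    if abs(denom) < 1e-12:
        raise ValueError("bearings are parallel, so the lines do not intersect")
    t = ((x2 - x1) * d2[1] - (y2 - y1) * d2[0]) / denom
    return (x1 + t * d1[0], y1 + t * d1[1])


# Two sensors 100 m apart hear the same source at bearings of 45 and 135 degrees.
sensor_a, sensor_b = (0.0, 0.0), (100.0, 0.0)
source = triangulate(sensor_a, 45.0, sensor_b, 135.0)
print(f"source at ({source[0]:.1f}, {source[1]:.1f}) m")
print(f"range from sensor A: {math.dist(sensor_a, source):.1f} m")
```

In this example the bearing lines cross at (50, 50) m, about 70.7 m from the first sensor.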

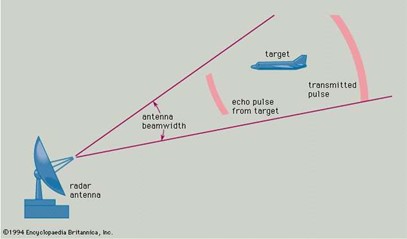

Radar, whose name derives from radio detection and ranging, is also similar to echolocation. Instead of emitting sound waves, however, a radar system transmits pulses of electromagnetic (radio) waves and measures how long it takes for an echo to return. The rest of the process remains much the same, as the analyzed data can help determine the size, shape, distance and speed of the target object (“Radar and Sonar” | Naval History and Heritage Command, n.d.). Because radar devices emit their own electromagnetic waves, they are considered active sensing devices (Skolnik, 2020). Radar is widely used for air traffic control, weather observation, law enforcement and planetary observation, among many other applications (Skolnik, 2020).
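Radar ranging uses the same halved round-trip relation as echolocation, with the speed of light in place of the speed of sound. For the target speed mentioned above, one common approach, assumed here because the source does not specify the method, is to measure the Doppler shift of the echo: for a target moving much more slowly than light, the two-way shift is f_d = 2vf0/c, so v = f_d·c/(2f0). The carrier frequency and Doppler shift in the example are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by definition of the metre


def radar_range_m(echo_delay_s: float) -> float:
    """Distance to the target: the pulse travels out and back at the speed of light."""
    return SPEED_OF_LIGHT_M_S * echo_delay_s / 2.0


def radial_speed_m_s(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Target speed along the line of sight from the two-way Doppler shift.

    Uses f_d = 2 * v * f0 / c, valid when v is much smaller than c.
    A positive shift means the target is approaching.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


# An echo returning after 1 ms, and a hypothetical 2 kHz shift on a 10 GHz carrier
print(f"range: {radar_range_m(1e-3) / 1000:.1f} km")
print(f"radial speed: {radial_speed_m_s(2_000.0, 10e9):.1f} m/s")
```

A 1 ms delay thus corresponds to a target roughly 150 km away, and the hypothetical 2 kHz shift to a closing speed of about 30 m/s.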

Finally, light detection and ranging, commonly referred to as Lidar, relies neither on sound waves nor on radio waves to scan the environment, but on laser light. By emitting ultraviolet, visible or near-infrared light, Lidar scanners map out physical environments at high resolution (Thomson, 2019). There are three types of Lidar scanners, each with its own function: topographic (mapping land), bathymetric (mapping underwater terrain) and terrestrial (mapping buildings and objects) (Thomson, 2019). By measuring the time the emitted light takes to return, and repeating this measurement for billions of data points, environments can be accurately pictured (Thomson, 2019). This technology is now being built into some recent smartphones and tablets, allowing users to map out the room they are in or accurately measure the length of objects around them.
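Each Lidar measurement combines a time of flight with the direction in which the laser was pointing. The sketch below is an illustration rather than any particular scanner’s processing pipeline: it converts one return (round-trip time, azimuth and elevation) into an (x, y, z) point, and repeating this for many returns yields the point cloud that pictures the environment. It also shows why timing precision matters: each nanosecond of timing error corresponds to about 15 cm of range.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def lidar_point(round_trip_s: float, azimuth_deg: float, elevation_deg: float):
    """Convert one time-of-flight return and beam direction into Cartesian coordinates (m)."""
    r = SPEED_OF_LIGHT_M_S * round_trip_s / 2.0  # halve the out-and-back path
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (r * math.cos(el) * math.cos(az),
            r * math.cos(el) * math.sin(az),
            r * math.sin(el))


# Three hypothetical returns, each arriving 20 ns after emission (about 3 m away)
returns = [(20e-9, 0.0, 0.0), (20e-9, 90.0, 0.0), (20e-9, 0.0, 30.0)]
point_cloud = [lidar_point(*ret) for ret in returns]
for x, y, z in point_cloud:
    print(f"({x:6.3f}, {y:6.3f}, {z:6.3f}) m")

# Range error caused by 1 ns of timing error
print(f"{SPEED_OF_LIGHT_M_S * 1e-9 / 2.0 * 100:.1f} cm per ns")
```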

Inner Ear of Bats

Hearing is an essential component of an animal’s perception of its environment, ultimately determining how effectively it can sense looming dangers and evade deadly risks. The remarkable sensory diversification and highly advanced auditory systems of mammals, compared with those of many other vertebrate groups, are a clear demonstration of their evolutionary success (Davies et al., 2013). The echolocating bat is a prime example of this advantage: it emits multi-harmonic frequency-modulated (FM) sounds and interprets their echoes to plan an optimal flight path (Hiryu et al., 2010).

Structural Modifications in Echolocating Bats

Multidisciplinary evidence suggests that auditory specializations in mammals can be linked to three principal adaptations: the evolution of three ossicles in the middle ear, the elongation of the basilar membrane in the cochlea, which provides a supporting base for the sensory hair cells, and the evolutionary innovation of outer hair cells (Davies et al., 2013). In bats, the overall structure of the cochlea still resembles that of other mammals, as shown in Fig. 8. Furthermore, genetic studies suggest that additional molecular changes have occurred in prestin, the motor protein of the outer hair cells (Zheng et al., 2000). High- and low-frequency sounds are detected by the basal and upper turns of the coiled cavity, respectively, which is partly achieved by a decrease in BM stiffness from base to apex (Robles and Ruggero, 2001).

Bats have some of the widest vocalization frequency ranges, and therefore presumably some of the widest hearing ranges, of any mammal group (Jones and Holderied, 2007). All bat families but one, the Old World fruit bats, use laryngeal echolocation for orientation, obstacle avoidance and prey detection, which provides an opportunity to investigate the specific cochlear features that enable the detection of ultrasonic echoes (Davies et al., 2013). As Kalina T. J. Davies and colleagues (2013) report, the inner ears of laryngeal echolocating bats show several structural adaptations for detecting ultrasonic echoes: their cochleae are often enlarged and have 2.5 to 3 turns, compared with an average of only 1.75 in non-echolocating fruit bats. A greatly enlarged basal turn of the cochlea, which allows exquisite tuning of the inner ear to the echoes of the specialized constant-frequency (CF) calls produced by some of these taxa, has also been observed.

In bats, BM length, which ranges from 6.9 mm to approximately 16 mm, is not simply tied to body weight across taxonomic groups, since one of the largest species has the same BM length as one of its smaller counterparts (Vater, 1988). Cochlear length seems to depend more on the type of echolocation signal used: the shortest cochlear ducts are apparently found in species emitting broadband calls, while bats emitting long CF-FM calls have the longest BMs, as can be seen in Fig. 9 (Vater, 1988).

As in other mammals, the ratio of inner to outer hair cells is about 1:3 to 1:4 in all bat species. Hair cell density increases slightly from base to apex, with small regional maxima or minima corresponding to changes in the density of spiral ganglion cells (Bruns and Schmieszek, 1980). The absolute numbers of spiral ganglion cells differ widely among species, but their distribution along the cochlea is more or less uniform in species emitting FM calls.

In horseshoe bats, the arrangement of the receptor cells, their stereocilia and their contact with the tectorial membrane are similar to those described in other mammals in the cochlear regions apical to the discontinuity in BM thickness and width (Bruns and Goldbach, 1980). However, several specializations can be observed in the basal half turn. There, the stereocilia of the outer hair cells are unusually short, the first row of outer hair cells is widely separated from the outer two rows, and the inner hair cell stereocilia are twice as long as those in other mammals or in other turns (Vater, 1988). The attachment of the stereocilia to the tectorial membrane is also peculiar in the basal half turn: the underside of the tectorial membrane has an unusual continuous zigzag elevation containing imprints of the innermost row of outer hair cells. It has been proposed that, in the middle and apical turns of the horseshoe bat cochlea, both the IHC and OHC stereocilia are attached to the tectorial membrane and are excited by shearing motion, whereas in the basal half turn only the OHC stereocilia are sheared and the IHC stereocilia are moved by a subtectorial fluid motion component (Vater, 1988).

Operation of the Cochlea

The mechanical response of the cochlea provides the basis of sensitive and selective hearing (Elliott and Shera, 2012). Environmental sounds stimulate the cochlea via vibrations of the stapes, the innermost of the middle ear ossicles (Robles and Ruggero, 2001). These vibrations produce displacement waves that travel along the elongated, spirally wound basilar membrane (BM). As they travel, the waves grow in amplitude, reach a maximum and then die out. The location of maximum BM motion depends on stimulus frequency: high-frequency waves peak near the base of the cochlea, close to the stapes, while lower-frequency waves peak progressively closer to the apex. Consequently, each cochlear site has a characteristic frequency to which it responds maximally (Robles and Ruggero, 2001). In echolocating bats, many of these characteristic frequencies are especially high, enabling the bats to detect the echoes of their emitted FM calls.

BM vibrations move the hair cell stereocilia, which leads to the generation of hair cell receptor potentials and the excitation of afferent auditory nerve fibers. At the base of the cochlea, BM motion exhibits a level-dependent compressive nonlinearity specific to each site’s characteristic frequency: responses to low-level stimuli near the characteristic frequency are sensitive and sharply tuned, while responses to intense stimuli are insensitive and poorly tuned (Robles and Ruggero, 2001).
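The compressive behavior described above is often pictured as an input/output curve. The sketch below is a deliberately simplified, hypothetical model of the response at a single site to tones near its characteristic frequency: the response grows linearly with a fixed amplifier gain at low levels, grows much more slowly above a knee point, and never falls below the passive, unamplified response. The gain, knee level and compression slope are illustrative values, not figures from Robles and Ruggero (2001).

```python
def bm_response_db(input_db: float, gain_db: float = 40.0,
                   knee_db: float = 30.0, slope: float = 0.25) -> float:
    """Illustrative BM response level (dB, arbitrary reference) to a near-CF tone.

    Below the knee the response is the input plus a fixed amplifier gain;
    above it the response grows by only `slope` dB per dB of input. The
    response is never allowed to fall below the passive (gain-free) response.
    """
    if input_db <= knee_db:
        active = input_db + gain_db
    else:
        active = knee_db + gain_db + slope * (input_db - knee_db)
    return max(active, input_db)


for level in (10, 30, 50, 70, 90):
    out = bm_response_db(level)
    print(f"input {level:3d} dB -> response {out:5.1f} dB (effective gain {out - level:5.1f} dB)")
```

The effective gain shrinks as the input level rises, which is the sense in which responses to intense stimuli are comparatively insensitive.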

The high sensitivity and sharp frequency tuning are highly labile, that is, easily degraded when the cochlea is damaged, which indicates the presence of positive feedback from the organ of Corti, known as the cochlear amplifier, in a normal cochlea, as shown in Fig. 10. This mechanism involves forces generated by the outer hair cells and controlled, directly or indirectly, by their transduction currents (Robles and Ruggero, 2001).

The BM vibrations deflect the stereocilia bundles, opening transduction channels that let positively charged ions from the surrounding fluid into the stereocilia, and hence into the hair cells, generating a voltage (Elliott and Shera, 2012). The inner hair cells then release a neurotransmitter that binds to receptors on nearby nerve fibers, producing voltage changes in the fibers that, once above a certain threshold, trigger the nerve impulses that send signals to the brain. These neural signals are then interpreted in the temporal lobe of the bat brain, thus enabling these creatures of shadow to perceive the world through a labyrinth of sounds (Elliott and Shera, 2012).

Humans and Echolocation

Unlike bats, humans do not generally use echolocation to perceive the world around them. This comes as no surprise, as humans rely primarily on their eyes, and their surroundings are, for the most part, bright enough for vision to be effective. This works out well, as the human auditory system is not adapted for echolocation. In fact, one major problem that humans would encounter if they attempted to echolocate is described by the precedence effect.

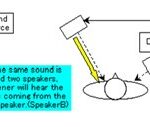

The precedence effect describes how two sounds reaching both ears in quick succession, separated by a short delay, are perceived not as two distinct sounds but as a single one (Zurek, 1987). Given the explanation of echolocation above, this poses a major problem: it implies that humans may be unable to identify reflected echoes as new sounds, since the emitted sound and its echoes will instead be heard as a single auditory event (Zurek, 1987). Over the years, various experiments have tested the precedence effect and its role in sound localization. Such experiments often use two speakers, as illustrated in Fig. 11. When the same sound is emitted simultaneously from two speakers at different distances, it appears to come from the closer speaker, since its sound waves arrive first. However, when the closer speaker’s emission is delayed just enough that its sound no longer arrives first, the sound is perceived as coming from the farther speaker (Doyle, n.d.).
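The two-speaker experiments can be reasoned about with arrival times alone: each sound reaches the listener after its emission delay plus its travel time, d/c. The sketch below assumes a speed of sound of 343 m/s and uses hypothetical speaker distances; it reports which speaker’s sound arrives first, which under the precedence effect is the speaker from which the fused sound is perceived to come. It deliberately ignores loudness differences and the upper limit on the delay beyond which two separate sounds are heard again.

```python
SPEED_OF_SOUND_AIR_M_S = 343.0  # approximate, air at about 20 °C


def arrival_times_s(distances_m, emission_delays_s):
    """Time at which each speaker's sound reaches the listener."""
    return [delay + d / SPEED_OF_SOUND_AIR_M_S
            for d, delay in zip(distances_m, emission_delays_s)]


def perceived_source(distances_m, emission_delays_s):
    """Index of the speaker whose sound arrives first (precedence-effect prediction)."""
    times = arrival_times_s(distances_m, emission_delays_s)
    return min(range(len(times)), key=times.__getitem__)


distances = [2.0, 3.0]  # hypothetical: near speaker at 2 m, far speaker at 3 m
print(perceived_source(distances, [0.0, 0.0]))    # no delay: near speaker (index 0)
print(perceived_source(distances, [0.005, 0.0]))  # near speaker delayed 5 ms: far speaker (index 1)
```

With a 1 m path difference, the near speaker’s head start is about 2.9 ms, so delaying it by 5 ms is enough to make the far speaker’s sound arrive first.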

Experiments have even shed light on how underlying neural mechanisms contribute to the precedence effect. Hartung and Trahiotis (2001) observed that the auditory nerve responds more strongly to an initial sound than to sounds arriving within a short delay of its onset.

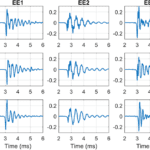

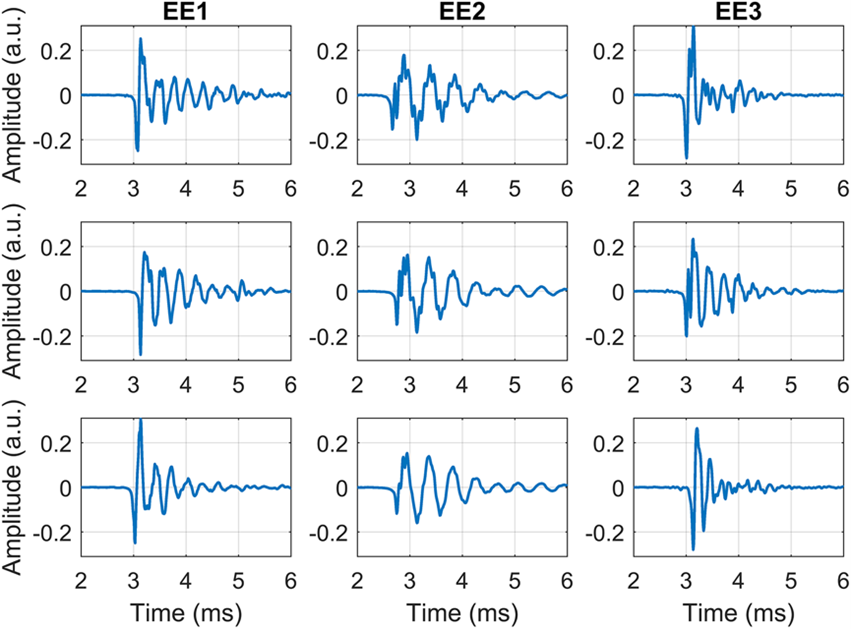

Although echolocation does not come easily to most humans, practice and rather extreme circumstances can enhance a person’s ability to use it, perhaps not to the level of a bat, but far beyond what the average human will ever achieve. In fact, evidence shows that blind people can enhance their echolocation skills, demonstrating how adaptable the human brain is (Servick, 2019). Indeed, some expert human echolocators have allowed scientists to conduct multiple studies on their craft. Research has shown that these experts make mouth clicks that last about 3 ms, as displayed in Fig. 12, with frequencies ranging from 2 to 4 kHz (Thaler et al., 2017). The short duration of the clicks may help these echolocators avoid problems caused by overlapping echoes, and the frequency of the clicks may help them direct their clicks more effectively into the environment (Thaler et al., 2017).
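The suggested benefit of short clicks can be checked with a back-of-the-envelope calculation, added here and not taken from Thaler et al.’s own analysis: an echo starts to overlap with the click whenever the round trip to the reflector takes less time than the click lasts, that is, whenever the reflector is closer than d = c·T/2. Assuming a speed of sound of 343 m/s, a 3 ms click keeps echoes separate from the click itself for objects beyond roughly half a metre, whereas a longer sound pushes that limit further out.

```python
SPEED_OF_SOUND_AIR_M_S = 343.0  # approximate, air at about 20 °C


def min_separate_echo_distance_m(click_duration_s: float) -> float:
    """Closest reflector whose echo begins only after the click has ended: d = c * T / 2."""
    return SPEED_OF_SOUND_AIR_M_S * click_duration_s / 2.0


# Compare the ~3 ms clicks reported by Thaler et al. with hypothetical longer sounds
for duration_ms in (3, 30, 300):
    d = min_separate_echo_distance_m(duration_ms / 1000)
    print(f"{duration_ms:3d} ms sound -> echoes overlap it for objects closer than {d:.2f} m")
```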

Further studies have attempted to identify which regions of the human brain are involved when these expert echolocators process echoes. Just as visual stimuli produce activity in the primary visual cortex, echolocating humans also show activity in the primary visual cortex when processing echoes (Servick, 2019). Thus, the visual cortex appears capable of spatial mapping based not only on vision but also on other senses, such as hearing, which suggests that the brain has a certain degree of neural flexibility (Servick, 2019).

However extraordinary such research into uncharted territory may be, nothing compares to hearing expert echolocators themselves describe how they use their abilities in everyday life, and how they have not let obstacles, often present from early in their lives, prevent them from living fully and experiencing images of the world around them. For example, Daniel Kish, a perceptual navigation specialist, describes how a form of echolocation has helped him live with the blindness he has had since the age of 13 months (refer to Kish, 2015 in Appendix).

Conclusion

The above investigation of the ear from a biomolecular, cellular and tissue engineering perspective reveals just how complex auditory systems can be. The deeper one travels into the organ, towards the inner ear, the more of this complexity is revealed. It is through the study of increasingly complex auditory systems that future experiments and analyses can enhance the way humans interact with their own auditory environment and inspire the development of future technologies. Thus, one question remains: in what direction is humanity heading as knowledge of echolocating auditory systems and of the human brain’s neural flexibility increases?

To make any significant advances in areas such as human echolocation, much more research must be done, as a great deal remains unknown. Most studies performed to date, like the ones mentioned above, have succeeded in analyzing data from expert echolocators, but no one has yet been able to accurately describe how the complete process works. Now that such capabilities are known to exist, within certain limitations, in the human brain and auditory system, extensive work must be put into understanding what makes these supernatural-esque feats possible. The future sounds promising: it appears to be only a matter of time before people like Daniel Kish face minimal limitations as they seek to explore every inch of the world around them, an ability that far too many take for granted.

Appendix

Kish, D. (2015). How I use sonar to navigate the world [Video]. TED. Retrieved from https://www.ted.com/talks/daniel_kish_how_i_use_sonar_to_navigate_the_world?language=en#t-1527

References

Abdalla, M. K. T. M. (2017). Applied Basic Science of the Auricular Cartilage. In A. R. Zorzi & J. Batista de Miranda (Eds.), Cartilage Repair and Regeneration. doi:10.5772/intechopen.72479

The Editors of Encyclopaedia Britannica. (2017, 27 Feb 2018). Echolocation. Retrieved from https://www.britannica.com/science/echolocation

Bruns, V., & Goldbach, M. (1980). Hair cells and tectorial membrane in the cochlea of the greater horseshoe bat. Anatomy and Embryology, 161(1), 51-63. doi:10.1007/bf00304668

Bruns, V., & Schmieszek, E. (1980). Cochlear innervation in the greater horseshoe bat: demonstration of an acoustic fovea. Hearing Research, 3(1), 27-43. doi:10.1016/0378-5955(80)90006-4

The Physics Classroom. (n.d.). Reflection, Refraction, and Diffraction. Retrieved from https://www.physicsclassroom.com/class/sound/Lesson-3/Reflection,-Refraction,-and-Diffraction

Naval History and Heritage Command. (n.d.). Radar and Sonar. Retrieved from https://www.history.navy.mil/browse-by-topic/exploration-and-innovation/radar-sonar.html

D’Augustino, T. (2018). Exploring our world: How do animals use sound? Retrieved from https://www.canr.msu.edu/news/exploring_our_world_how_do_animals_use_sound

Davies, K. T. J., Maryanto, I., & Rossiter, S. J. (2013). Evolutionary origins of ultrasonic hearing and laryngeal echolocation in bats inferred from morphological analyses of the inner ear. Frontiers in Zoology, 10(1), 2. doi:10.1186/1742-9994-10-2

Doyle, K. (n.d.). Early Reflections In An Enclosed Environment. Retrieved from http://www.kevindoylemusic.com/education_reflections/

Elliott, S. J., & Shera, C. A. (2012). The cochlea as a smart structure. Smart Materials and Structures, 21(6), 064001. doi:10.1088/0964-1726/21/6/064001

Epomedicine. (2013). Applied Anatomy of Tympanic Membrane. Retrieved from https://epomedicine.com/medical-students/applied-anatomy-of-tympanic-membrane/

WHOI CSI Facility. (2015). Big Brown Bat Cochlea and Ossicles 0002. Retrieved from https://csi.whoi.edu/innerears/big-brown-bat-0002jpg/index.html

Fettiplace, R. Hair Cell Transduction, Tuning, and Synaptic Transmission in the Mammalian Cochlea. In Comprehensive Physiology (pp. 1197-1227).

Guelke, R., & Keen, J. A. (1952). A study of the movements of the auditory ossicles under stroboscopic illumination. The Journal of Physiology, 116(2), 175-188. doi:10.1113/jphysiol.1952.sp004698

Hartung, K., & Trahiotis, C. (2001). Peripheral auditory processing and investigations of the “precedence effect” which utilize successive transient stimuli. The Journal of the Acoustical Society of America, 110(3), 1505-1513. doi:10.1121/1.1390339

Hawkins, J. E. (2020, 29 Oct 2020). Human ear. Retrieved from https://www.britannica.com/science/ear

Hiryu, S., Bates, M. E., Simmons, J. A., & Riquimaroux, H. (2010). FM echolocating bats shift frequencies to avoid broadcast–echo ambiguity in clutter. Proceedings of the National Academy of Sciences, 107(15), 7048-7053. Retrieved from https://www.pnas.org/content/pnas/107/15/7048.full.pdf

Jones, G., & Holderied, M. W. (2007). Bat echolocation calls: adaptation and convergent evolution. Proceedings of the Royal Society B: Biological Sciences, 274(1612), 905-912. doi:10.1098/rspb.2006.0200

Kim, S. (2015). Bio-inspired engineered sonar systems based on the understanding of bat echolocation. In T. D. Ngo (Ed.), Biomimetic Technologies (pp. 141-160). Woodhead Publishing.

Lim, D. J. (1970). Human Tympanic Membrane. Acta Oto-Laryngologica, 70(3), 176-186. doi:10.3109/00016487009181875

Marabisa, J. (2020, 9 Sep 2019). Sonar System Using Arduino and Altair Embed. Retrieved from https://altairuniversity.com/45471-sonar-system-using-arduino-block-diagram-model/

McPherson, D. R. (2018). Sensory Hair Cells: An Introduction to Structure and Physiology. Integrative and Comparative Biology, 58(2), 282-300. doi:10.1093/icb/icy064

Price, J. (2015). What is echolocation and which animals use it? Retrieved from https://www.discoverwildlife.com/animal-facts/what-is-echolocation/

Puel, J. L. (1995). Chemical synaptic transmission in the cochlea. Progress in Neurobiology, 47(6), 449-476. doi:10.1016/0301-0082(95)00028-3

Purves, D., Augustine, G., & Fitzpatrick, D. (2001). The Audible Spectrum. In Neuroscience (2nd ed.). Sinauer Associates.

Robles, L., & Ruggero, M. A. (2001). Mechanics of the Mammalian Cochlea. Physiological Reviews, 81(3), 1305-1352. doi:10.1152/physrev.2001.81.3.1305

National Ocean Service. (n.d.). What is sonar? Retrieved from https://oceanservice.noaa.gov/facts/sonar.html

Servick, K. (2019). Echolocation in blind people reveals the brain’s adaptive powers. Retrieved from https://www.sciencemag.org/news/2019/10/echolocation-blind-people-reveals-brain-s-adaptive-powers

Skolnik, M. I. (2020, 18 Nov 2020). Radar. Retrieved from https://www.britannica.com/technology/radar

Deeper Smart Sonar. (n.d.). How Sonars Work: Key Aspects to Know. Retrieved from https://deepersonar.com/us/en_us/how-it-works/how-sonars-work

Healthline Editorial Team. (2015, 13 Jan 2015). Auricular cartilage. Retrieved from https://www.healthline.com/human-body-maps/auricular-cartilage#1

Thaler, L., Reich, G. M., Zhang, X., Wang, D., Smith, G. E., Tao, Z., . . . Antoniou, M. (2017). Mouth-clicks used by blind expert human echolocators – signal description and model based signal synthesis. PLOS Computational Biology, 13(8), e1005670. doi:10.1371/journal.pcbi.1005670

Thomson, C. (2019). How Does LiDAR work? Laser Scanners Explained. Retrieved from https://info.vercator.com/blog/how-does-lidar-work-laser-scanners-explained

Vater, M. (1988). Cochlear Physiology and Anatomy in Bats. In P. E. Nachtigall & P. W. B. Moore (Eds.), Animal Sonar: Processes and Performance (pp. 225-241). Boston, MA: Springer US.

Vetter, D. E. (2008). How do the hammer, anvil and stirrup bones amplify sound into the inner ear? Retrieved from https://www.scientificamerican.com/article/experts-how-do-the-hammer-anvil-a/

Zheng, J., Shen, W., He, D. Z. Z., Long, K. B., Madison, L. D., & Dallos, P. (2000). Prestin is the motor protein of cochlear outer hair cells. Nature, 405(6783), 149-155. doi:10.1038/35012009

Zurek, P. M. (1987). The Precedence Effect. In W. A. Yost & G. Gourevitch (Eds.), Directional Hearing (pp. 85-105). New York, NY: Springer US. doi:10.1007/978-1-4612-4738-8_4